Creativity AI #14: Weekly AI updates for creativity and productivity

Midjourney Office Hours (2025-04-02), Midjourney V7, Runway Gen-4, Perplexity PLEX bot, ElevenLabs Actor Mode, Learn AI and stay competitive in your job, Free Google Gemini 2.5 Pro

Creativity AI is a new publication that brings together interesting articles and recent developments in the AI world related to creativity and productivity.

Midjourney Office Hours (2025-04-02)

The Midjourney V7 launch will occur within the next 24 hours.

Here's what I know as of this writing:

At launch, there will be two speed modes: Standard and Draft.

Standard mode focuses on quality and is computationally intensive. It must be optimized before launch; otherwise, there will not be enough servers. The optimization pipeline currently includes three options. The goal is to have at least one running for launch.

Draft mode is more conversational and faster, and it is said to provide a tenfold better experience. Once you've decided what you want, continue in Standard mode. Draft mode works consistently well, and the developers could potentially make it extremely fast: so fast that it would be impossible to adjust or moderate the prompt in time, which would require a different type of interface.

"More conversational" does not mean a chatbot interface. It is iterative: you prompt, and the bot responds with the generated image.

Depending on your application, the output can be "pretty good" and you may only want to use the draft. It's basically real-time or "prompting on the fly."

V7 will be more coherent, with a different aesthetic and texture.

It has improved prompt adherence and understanding over the previous version.

It supports Sref and Personalization.

The Remix, multi-prompt, style explorer, and Sref random features will not be available at launch.

Images may have a warmer (yellowish) color tone.

It will work with short text.

Midjourney intends to have a weekly release cycle for the next four weeks as more features are coming:

New features include Omni-reference (better Cref), a new Upscaler, and "Super Slow Modes" that allow you to experiment in Draft Mode before moving to Standard Mode.

Turbo and Relax modes are coming.

Draft blending mode that can be super fast.

Better editor.

Next-generation Sref and Moodboard at some point.

Transparency is unlikely to arrive soon.

Half of the development resources will be allocated to what the community wants.

Niji criticizes the fact that anime is being westernized in the main model.

The video feature is currently in training and should be ready by the end of the month. The launch date is presently unknown. Midjourney wants to make its video both accessible and affordable. There might be a video rating party next month.

Two secret projects will be revealed in the coming months.

David attempted to run a V7 demo during Office Hours but was unable to do so due to server issues.

Runway

Runway recently introduced its latest video model, Gen-4. It is considered a significant update because the company had been quiet for a long time while competitors released new features one after another.

Based on its marketing messages, the new model appears impressive: improved video quality, consistent styles, subjects, and locations, reference images, and so on. Have a look at the official announcement.

Feature showcase video and link:

Video link to Runway Academy for more technical explanation.

I tried it. In comparison to Gen-3, video quality and prompt adherence have greatly improved. I also like that it accepts images in a variety of aspect ratios, which widens its use cases.

The reference image feature for subject consistency is not yet available. I guess developers now stagger their updates, because in the competitive AI industry a company must keep releasing at least one new feature every week or two, or people will forget about it. Anyway, I can't wait for reference images to see what I can come up with in this imaginary yet fascinating virtual world.

Why does this matter?

It's great to see Runway "active again". I hope it regains the position it held as the most popular AI video generator after the release of its highly successful Act-One feature.

If Runway delivers on its promises in the marketing materials, it will be a highly versatile and powerful AI video generator, especially when combined with Midjourney, ChatGPT-4o, and Ideogram.

This is a short video I generated with Runway Gen-4 from a Midjourney image. Sound effects were added with ElevenLabs.

Perplexity

Perplexity has just launched its new Discord bot, PLEX, which allows users to ask questions and create memes.

You can ask a question by typing "@plex" followed by your question. It is a simple feature with no settings or parameters to adjust.

This is the meme I made with the PLEX bot on Discord.

Perplexity also updated its interface, making searching more straightforward. If you're new to AI and want to experiment with different models, Perplexity is a good option because you can subscribe to one platform and try out several models before committing to a specific one.

Why does this matter?

Perplexity has its own reasoning model, R1 1776, which gives you one more reasoning model to choose from.

New models are constantly being introduced while older models are phased out. Perplexity's model list therefore also serves as an overview of the latest models on the market. However, not everything is added immediately, for a variety of reasons. For example, Google Gemini 2.5 Pro (Experimental) has not yet been added to the list.

ElevenLabs

One of the drawbacks of using an AI-generated voice to narrate a story is a lack of emotion, particularly in action scenes where a character expresses strong emotions such as fear, anger, sorrow, or frustration. In those circumstances, the AI voice's pacing may not come out as expected.

ElevenLabs' new Actor Mode now lets you control the delivery of your generated audio, including pacing, intonation, and emotion, by using your own voice as a performance guide. The feature can be accessed via Studio.

This is the script I used to demonstrate the new feature. Compare the effect of Standard Mode (default AI voice) and Actor Mode (human-guided AI voice; in this case, I'm the actor! Clap, please...!).

Standard Mode

Please... please stop. I'm begging you!

Actor Mode

Please... please stop. I'm begging you!

The result:

Why does this matter?

The ability to control the pace and expression of emotion is a significant step forward in using AI to narrate stories and create voiceovers. It simplifies voice editing for AI voices and produces impressive results. (And you have a chance to act using your voice!)

Learn AI and stay competitive in your job

Anthropic recently released a report analyzing how people use the extended thinking mode of its Claude 3.7 Sonnet model. The study covered one million conversations and found that the mode is mainly used for technical and creative problem-solving. Computer and information research scientists lead the pack at nearly 10%, followed by software developers at 8%. Creative roles like multimedia artists and video game designers also show significant usage.

Augmentative use cases, such as learning or iterating on an output, remain dominant at 57%, whereas automation, such as asking the model to directly complete a task or debug errors, continues to play a crucial role. Copywriters, for example, iterate on tasks extensively, whereas translators prefer automation. Overall, the extended thinking mode enhances various occupations.

Why does this matter?

If your job requires technical and creative problem-solving, invest in learning AI to remain competitive in a rapidly changing job market. Your peers may be ahead of you in using AI, but you can catch up if you continue to learn one step at a time.

Other news

Google has made its latest Gemini 2.5 Pro (Experimental) model available for free to all users. Previously, it was a paid feature. The new model is said to be ideal for complex tasks. If you want to try it out, log into your Gemini account and select it from the dropdown list.

OpenAI has launched its Academy, which provides extensive educational resources. Check it out here.

Anthropic has introduced a specialized version of Claude for higher education institutions that focuses on teaching, learning, and administration.

Inspiration

I hope you're not sick of Studio Ghibli's style, which has spread like wildfire on social media. (Coz I am.)

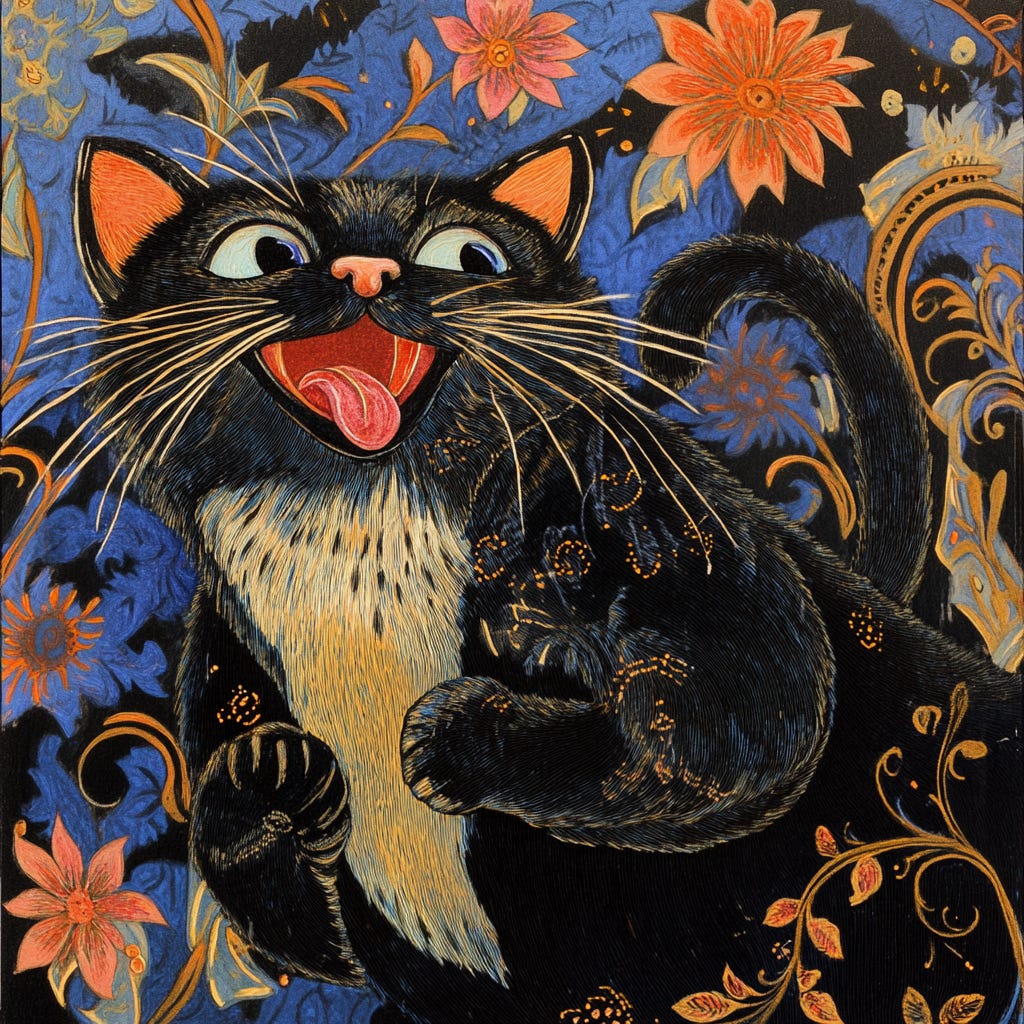

So I looked elsewhere to explore the vast ocean of artistic styles, and I learned something new today: reverse painting. Also known as reverse glass painting, it is a creative technique in which an image is painted on one side of a transparent glass surface while the finished artwork is viewed from the opposite side.

Michalina Janoszanka (1889-1952) is a well-known artist who specialized in reverse painting. I used some of her public domain artworks to create a moodboard style. What do you think about the results?

Recent articles

Free Geeky Animals' Sref code: 115346132

Cover prompt: . --ar 91:51 --sref 115346132 --v 6.1

The cover image was created in Midjourney. The text was added with ChatGPT-4o. Interestingly, ChatGPT changed the overall aesthetic to focus more on the character. The image was then imported back into Midjourney's Full Editor for further editing (e.g., fixing the fingers and blurring the reflection).

The result? Look at the top image :-)

I hope you like this article!

Thank you for reading and happy creating!