Creativity AI #24: Major Changes and Updates

News about AI art, Changes in Geeky Curiosity, Prompt Play, Inspiration

Creativity AI is a free weekly publication that brings together interesting articles and recent developments in AI art and creativity.

You can also read this newsletter on the website.

News about AI art

Midjourney's got some exciting stuff brewing, and I wanted to give you the scoop on what's heading our way. Let me break down the three main developments that caught my attention.

1. Enhanced Sref feature drops this week

The new Sref feature is rolling out within days, and it's bringing some cool upgrades. If you're already using Sref codes, here's what you need to know: add --sv 4 or --sv 5 to keep them working with the new system.

The update includes Sref random functionality and enhanced moodboard features. Plus, the new Sref URL system should work much more smoothly than before.

2. Video model arriving soon (with limitations)

Midjourney is being careful about its server capacity, so the initial video model launch will likely be limited to yearly, Standard, or Mega plan users. The feature works on V4 to V7 and Niji images, but it only supports image-to-video for now.

Interestingly, they're planning a Niji-specific video model too. More video features like video extension are coming after launch. You can actually check out early video samples at their video rating party: https://www.midjourney.com/rank-video

3. What's next on the roadmap

V7.1 is coming after the video release, promising better coherence by incorporating video model learnings. A Style Explore feature will follow the new Sref launch.

However, don't hold your breath for Omni-Reference improvements; Midjourney is facing some technical challenges there.

These updates show Midjourney's focus on refining existing tools while expanding creative possibilities. Its future is bright.

Here's a video I captured during my rating party session. You can see that the camera can move and that the multi-character scene looks good!

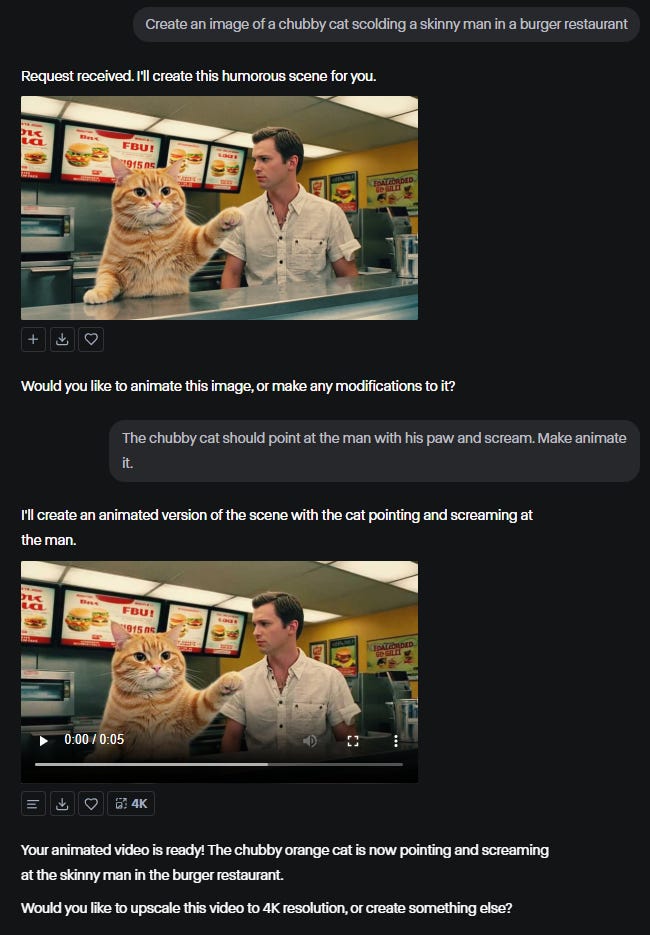

Runway has quietly activated its Chat Mode Beta, which lets users chat with its bot to generate images or videos. See my interaction with the new interface. It's a bit slow, though; slower than ChatGPT for sure.

What I created with Runway text-to-image.

Topaz Labs just dropped Bloom, a new upscaling tool that enlarges AI-generated images up to 8x their original size. While Midjourney gives you two upscaler options (Subtle and Creative), Bloom offers five creativity levels: Subtle, Low, Medium, High, and Max. It also includes a detail-adding feature similar to Midjourney's Retexture. Pricing starts at $7.49 monthly or $89.90 annually, and a free plan is available.

I'm on the fence about this one. I already own Topaz Gigapixel, and I seldom use it. Gigapixel handles my upscaling needs just fine, and the results work well enough for stock photo sales. So why spend money on Bloom? I'm not sure.

To learn more, go to Bloom’s website.

Microsoft launched Bing Video Creator, but there's a catch: it's mobile-only. There's no desktop version yet, which feels odd in 2025, especially for AI video creators. The tool uses OpenAI's Sora for text-to-video generation. My reaction? Pretty underwhelmed. Perhaps Microsoft isn't trying to compete with established desktop tools yet, but launching mobile-only feels like it misses the mark. Also, text-to-video on its own isn't that exciting.

Read more about it here.

The Pudding released a fascinating YouTube video called "The Loneliness Epidemic" that uses data visualization to explore how people are becoming increasingly isolated. Watching it was a genuine wake-up call about prioritizing real human connections over digital distractions (including AI experiments).

The video reminded me to spend more quality time with family and friends rather than getting lost in endless AI tinkering. This is exactly the kind of content I love: thoughtful, data-driven storytelling that makes you pause and reflect.

Kling 2.1 rolled out two weeks ago, and I'm still blown away by the video quality. If you're loyal to Runway but haven't seen Kling's announcement video, you're missing out.

I've created several clips using Kling 2.1 Master, and it's the best AI video generation I've experienced. The results are genuinely impressive.

The only downside? The pricing will make your wallet weep. At 100 credits for just 5 seconds of video (20 credits per second), costs add up quickly. But when the quality is this good, you almost forget about the financial sting. Well, at least until you run out of credits.

If you're interested in subscribing to Kling, you can use my promo code here to get 50% bonus credits for the first month.

Read the Kling 2.1 release note here.

Watch it here

And here's a video clip that mesmerizes me.

It was created with Kling 2.1 Master in a single try.

Kling 2.1 Master output

Update from Geeky Curiosity

I'm making a big change around here.

Geeky Curiosity is going all-in on AI art generation from now on.

You'll see focused coverage of Midjourney, Ideogram, ChatGPT, Runway, Kling, and whatever cutting-edge visual AI tools emerge next. Images, videos, and all the creative stuff that makes your eyeballs happy.

What about text-based AI like Perplexity, Claude, and Gemini? Coverage of those tools is moving to a brand-new publication launching in the coming months.

This focused approach lets me pour all my energy into the visual AI content you actually want to read. No more trying to juggle everything at once. Just pure, concentrated AI art goodness delivered straight to your inbox.

Read more about the changes in these articles:

About: https://geekycuriosity.substack.com/about

The content: https://geekycuriosity.substack.com/p/what-youll-find-at-geeky-curiosity

The main AI tools for creating images and videos that are covered in this newsletter:

https://geekycuriosity.substack.com/p/my-go-to-ai-tools-for-creating-images

Here's a poll. Let me know what you think about these changes.

Prompt Play

This Prompt Play is a bit unfair. ChatGPT-4o is well known for its ability to generate comic panels, but sometimes you need to test the obvious to confirm your assumptions.

ChatGPT-4o

Prompt: Create a 4-panel comic strip featuring a lazy cat named Tucker and his owner Jenny. The setting is their living room. The tone is light and funny. Write dialogue and describe the visuals for each panel.

ChatGPT-4o delivers exactly what you'd expect: complete comic strips with matching text and visuals. The catch? Its distinctive "ChatGPT aesthetic" screams AI-generated from a mile away. Anyone familiar with AI tools will instantly recognize it as ChatGPT output.

Ideogram V3

Prompt: A 4-panel comic strip featuring a lazy cat named Tucker and his owner Jenny. The setting is their living room. The tone is light and funny. Write dialogue and describe the visuals for each panel.

Ideogram V3 takes a different approach. It didn't produce coherent dialogue in this test; instead, it filled the speech bubbles with creative gibberish that you'll need to replace manually. It also produced 3 panels instead of 4. On the plus side, its varied aesthetic styles make the results much harder to identify as AI-generated.

Midjourney V7

Prompt: A 4-panel comic strip featuring a lazy cat named Tucker and his owner Jenny. The setting is their living room. The tone is light and funny. Write dialogue and describe the visuals for each panel. --profile vpxk9od jqfuczz --v 7

Midjourney V7 struggles with text generation but excels at creating visually stunning comic panels, with plenty of aesthetic controls (e.g., Style References, Personalization, and Moodboards). You'll get beautiful layouts and compositions, but you'll need to add the text yourself.

The verdict

For quick, casual comics where AI detection doesn't matter, ChatGPT-4o wins hands down. It's effortless and functional, perfect for internal presentations or personal projects.

However, if you need comics that don't immediately scream "AI-made," Ideogram and Midjourney require more work but offer better aesthetic disguises. The manual text addition becomes worthwhile when authenticity matters.

I expect this gap between AI art generators won't last forever. Image generators are evolving rapidly, and the kind of accurate in-image text that gives ChatGPT its advantage is likely to become standard across platforms soon.

Inspiration

Shutter speed controls how long your camera's film or sensor is exposed to light. Think of it like blinking: a quick blink captures a sharp moment, while keeping your eyes open longer lets movement appear as a beautiful blur.

I used Kodak Portra 400 as the common film stock in every prompt to test slower shutter speed effects in Midjourney.

At 1/60 second: Subtle blur adds life to moving subjects.

Drop to 1/30 second: Motion blur becomes pronounced. Moving cars leave gentle streaks.

Slow to 1/15 second: Light trails from headlights become visible in some of the images in each grid.

At 1/4 second: Images take on a dreamy quality.

Push to 10 seconds: Creative explosion time! Car headlights become rivers of light; busy streets transform into flowing energy.

Conclusion

Midjourney understands shutter speed. However, the degree of motion blur and light trails is not directly proportional to the numerical value of shutter speed.

That means some images generated with a faster shutter speed (1/15 second) may look identical to those generated with the slowest shutter speed of 10 seconds, even though a 10-second exposure is 150 times longer.


Free Geeky Animals' Sref code: 459085650

Prompts were created using MJ Simple Prompt Generator (plus my brief idea).

Cover prompt: . --ar 16:9 --sref 459085650 --profile r3snb8i --sv 4 --v 7

I hope you like this article!

Thank you for reading and happy creating!