Creativity AI #10: Your weekly updates on creativity and productivity

Midjourney Office Hours (2025-03-05), Perplexity, GPT-4.5, When robots work so well together, ElevenLabs Scribe

Creativity AI is a new publication that brings together interesting articles and recent developments in the AI world related to creativity and productivity.

Midjourney Office Hours (2025-03-05)

Midjourney V7 could be released in two weeks. But there's no guarantee.

What to expect from V7 (as mentioned during Office Hours): Omni-reference, an improved Upscaler (4x, a new type of Upscaler), faster generation, compatibility with existing features (e.g., profile codes), and aesthetic updates.

V7 may be released in alpha mode, and things could change drastically overnight.

Relaxation (free, fast image generation) will continue until V7 is released.

There are still a lot of things to figure out with video. Its current state is not yet what the developers want, and there is no information about its release date.

David Holz, Midjourney's founder, wants to build a real-time drawing tool for people who enjoy drawing.

75% of Midjourney users use it for fun, so Midjourney does not want to build complex tools. Over time, the tools will naturally progress to become useful to professionals as well.

Midjourney's philosophy is to make the tool fun before it becomes productive.

Many people think that Midjourney is for creating art, but David disagrees. Midjourney is for imagination: a vehicle that lets users amplify their imagination visually.

David uses Perplexity for search, and he also likes DeepSeek.

Perplexity

OpenAI has released its latest model, GPT-4.5, but only to a limited group of users so far. What if GPT-4.5 is not yet available to you and you are eager to try it out?

Well, Perplexity has added GPT-4.5 to its AI model list! There's no need to wait for OpenAI; instead, use Perplexity to see if it works for you. The limitation is that users can only use it ten times per day.

Why does this matter?

I still prefer Perplexity Deep Research and Claude Extended Thinking Mode. There are some online debates about GPT-4.5, with some claiming that the new model falls short of their expectations. I’m unsure about that. Just like any other AI tool, you must try it for yourself and make your own judgment.

When robots cooperate well, some people freak out

I showed video clips of bots talking and working together harmoniously to my friends and coworkers. Their responses were similar: "Wow... nice... we are doomed." "The robots will take over now."

Haha.

When we use Perplexity, ChatGPT, Claude, and other AI models, the bots do not seem particularly threatening, probably because they are virtual. They are our digital assistants, helping us complete tasks quickly so that we can move on to other things.

But when bots become physical and collaborate with other bots, it's a different story. Some of us may feel marginalized, unable to make significant contributions, and afraid of being replaced.

I'm not sure when enough robots will be built to take over the physical world; I suppose it will take time. More importantly, we must learn AI and learn to collaborate effectively with both virtual and physical bots to be better prepared for the future.

The famous adage "AI won't take your job, but people who know how to use AI will" rings true. Now is the time to learn more about AI, keep up with technology, and remain competitive in the economy.

ElevenLabs

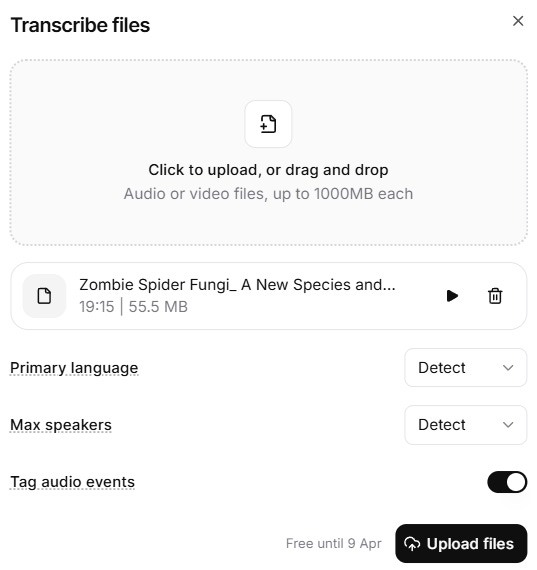

ElevenLabs now offers Scribe, a speech-to-text model; however, on the website, the feature is referred to as "Speech to text". It supports 99 languages, speaker diarization (the separation and identification of different speakers in an audio clip), character-level timestamps, and non-speech events like laughter.
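Character-level timestamps are easiest to picture as a list of characters, each carrying its own start and end time. The minimal Python sketch below assumes a simple hypothetical `(text, start, end)` shape, not ElevenLabs' actual response format, and shows how such timestamps can be rolled up into word timings:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Char:
    """One character with start/end times in seconds (hypothetical shape)."""
    text: str
    start: float
    end: float


@dataclass
class Word:
    text: str
    start: float
    end: float


def _make_word(chars: List[Char]) -> Word:
    # A word starts when its first character starts and ends when its last one ends.
    return Word("".join(c.text for c in chars), chars[0].start, chars[-1].end)


def group_into_words(chars: List[Char]) -> List[Word]:
    """Merge consecutive non-whitespace characters into words."""
    words: List[Word] = []
    current: List[Char] = []
    for ch in chars:
        if ch.text.isspace():
            # Whitespace closes the word being built, if any.
            if current:
                words.append(_make_word(current))
                current = []
        else:
            current.append(ch)
    if current:  # flush the final word
        words.append(_make_word(current))
    return words


if __name__ == "__main__":
    sample = [Char("H", 0.00, 0.05), Char("i", 0.05, 0.12), Char(" ", 0.12, 0.20),
              Char("y", 0.20, 0.28), Char("o", 0.28, 0.35), Char("u", 0.35, 0.44)]
    for word in group_into_words(sample):
        print(word)
```

Fine-grained timing like this is what makes precise subtitle cues and word highlighting possible.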

I transcribed a podcast generated with NotebookLM Plus. The model may have been overly sensitive, as it detected four speakers when there are only two. It did accurately capture non-speech events like "mm-hmm" and a brief pause when a speaker took a breath.

When you export the transcript as a subtitle file (.srt), the file includes timestamps for the speech.
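For reference, an .srt file is plain text made of numbered cues, each with a `start --> end` line in `HH:MM:SS,mmm` format followed by the caption text. The sketch below assumes you already have `(start, end, text)` segments in seconds (a hypothetical input, not how Scribe builds its export) and writes them in that format:

```python
from typing import List, Tuple


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: List[Tuple[float, float, str]]) -> str:
    """Build SRT text from (start_seconds, end_seconds, text) segments.

    Each cue is an index line, a timing line, the caption text,
    and a blank line separating it from the next cue.
    """
    cues = []
    for i, (start, end, text) in enumerate(segments, start=1):
        cues.append(f"{i}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text}\n")
    return "\n".join(cues)


if __name__ == "__main__":
    print(to_srt([(0.0, 2.5, "Welcome back to the show."),
                  (2.5, 5.0, "Today we're talking about AI voices.")]))
```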

Why does this matter?

The speech-to-text feature is useful for video creators who need subtitles, but many competitors also provide it. The question is: what else can the generated subtitles be used for? Will there be a feature that lets AI read subtitles at the same pace as the original speaker for voiceover or dubbing?
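On the pacing question, one rough way to measure how fast the original speaker talks is to read the exported .srt file and compute words per minute for each cue. This is a hypothetical Python sketch, not an existing ElevenLabs feature, and it assumes well-formed cues separated by blank lines:

```python
import re
from typing import List, Tuple

# Matches a cue's timing line, e.g. "00:00:02,500 --> 00:00:04,000".
TIMING = re.compile(
    r"(\d{2}):(\d{2}):(\d{2}),(\d{3})\s*-->\s*(\d{2}):(\d{2}):(\d{2}),(\d{3})"
)


def _to_seconds(h: str, m: str, s: str, ms: str) -> float:
    return int(h) * 3600 + int(m) * 60 + int(s) + int(ms) / 1000


def speaking_pace(srt_text: str) -> List[Tuple[str, float]]:
    """Return (cue text, words per minute) for each cue in an SRT string."""
    results = []
    # Cues are separated by blank lines.
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = block.splitlines()
        if len(lines) < 3:
            continue  # skip cues missing an index, timing, or text line
        match = TIMING.search(lines[1])
        if not match:
            continue  # skip cues with an unreadable timing line
        g = match.groups()
        duration = _to_seconds(*g[4:]) - _to_seconds(*g[:4])
        text = " ".join(lines[2:])
        if duration > 0:
            results.append((text, len(text.split()) / duration * 60))
    return results


if __name__ == "__main__":
    sample = ("1\n00:00:00,000 --> 00:00:02,000\nHello there everyone\n\n"
              "2\n00:00:02,000 --> 00:00:05,000\nWelcome back to the show\n")
    for text, wpm in speaking_pace(sample):
        print(f"{wpm:.0f} wpm  {text}")
```

A dubbing tool could, in principle, compare these numbers with the pace of the generated voice, but that step is speculative.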

If you're using Runway Act-One to create a speaking AI character, drag the video file into Scribe to generate subtitles. You will save time and money by not paying for a separate transcription service.

Top AI voices

Here are the most popular AI voices, according to ElevenLabs:

Brian (American accent) is the top choice in the US

George (British accent) is preferred in the UK and Australia

Jessica (Portuguese) is preferred by listeners in Brazil

David (Portuguese) is preferred by European Portuguese listeners

Why does this matter?

If you're creating English content for the US, UK, or Australia, using the Brian or George voice may improve your likelihood of success. However, if you want to create a unique experience, consider deviating from the norm and using a different voice.


Other news

Ideogram 2a has been released. It is said to be faster than its predecessor while costing half as much. Ideogram has also released a minor update to Canvas, which now allows for image rotation.

Inspiration

Printmaking techniques are methods for producing multiple copies of an artwork by transferring an image from a matrix to another surface, such as fabric or paper. An article discusses nine vintage printmaking techniques that artists use to create specific aesthetic styles, including etching, woodcut, lithography, engraving, drypoint, mezzotint, aquatint, and monotype.

I used these terms as keywords to create the artworks shown below in Midjourney. The results are surprising because the bot did not strictly follow the prompt to achieve the desired aesthetics.

The majority of the generated images lack a vintage appearance and are colorful. However, the keywords had some visible effects on the generated output, especially the "woodcut" illustration. The --style raw parameter helps to reinforce the aesthetic effect of the printmaking keywords, but only to a certain extent.

Based on this small experiment, I concluded that keywords alone may not reliably replicate the vintage effects of printmaking. Other options are worth considering, such as combining the keywords with an Sref or Moodboard code.
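To repeat a similar experiment more systematically, a short Python helper can generate prompt variants for each technique: keyword only, keyword with `--style raw`, and optionally keyword with `--sref`. The phrasing `vintage <technique> print`, the example subject, and the use of the Geeky Animals Sref code are assumptions for illustration, not the prompts used in this experiment:

```python
from typing import List, Optional

# Printmaking techniques named in the article.
TECHNIQUES = ["etching", "woodcut", "lithography", "engraving",
              "drypoint", "mezzotint", "aquatint", "monotype"]


def prompt_variants(subject: str, technique: str,
                    sref: Optional[str] = None, version: str = "6.1") -> List[str]:
    """Build Midjourney prompt variants for one printmaking keyword.

    The "vintage <technique> print" wording is a hypothetical choice.
    """
    base = f"{subject}, vintage {technique} print"
    variants = [
        f"{base} --v {version}",              # keyword only
        f"{base} --style raw --v {version}",  # keyword + raw style
    ]
    if sref:
        # keyword + raw style + a style reference code
        variants.append(f"{base} --style raw --sref {sref} --v {version}")
    return variants


if __name__ == "__main__":
    # Placeholder Sref code; swap in one whose look suits vintage prints.
    for technique in TECHNIQUES:
        for prompt in prompt_variants("a lighthouse on a rocky coast", technique,
                                      sref="1602835718"):
            print(prompt)
```

Keeping the subject fixed and changing one parameter at a time makes it easier to tell whether the keyword, `--style raw`, or the Sref code is driving the look.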

Recent articles

Free Geeky Animals' Sref code: 1602835718

Cover prompt: A half-human, half-machine figure witnessing the miracle of life, as green leaves emerge from the glowing screen of an old computer, ethereal lighting, intricate textures --ar 16:9 --sref 1602835718 --v 6.1

Invitation to publish on Creativity AI

Please contact me if you want to publish on Creativity AI.

This publication will be distributed through Substack and Medium platforms, reaching thousands of readers.

Email: animalsgeeky@gmail.com